Final Cut Pro User Guide

How does object tracking work in Final Cut Pro?

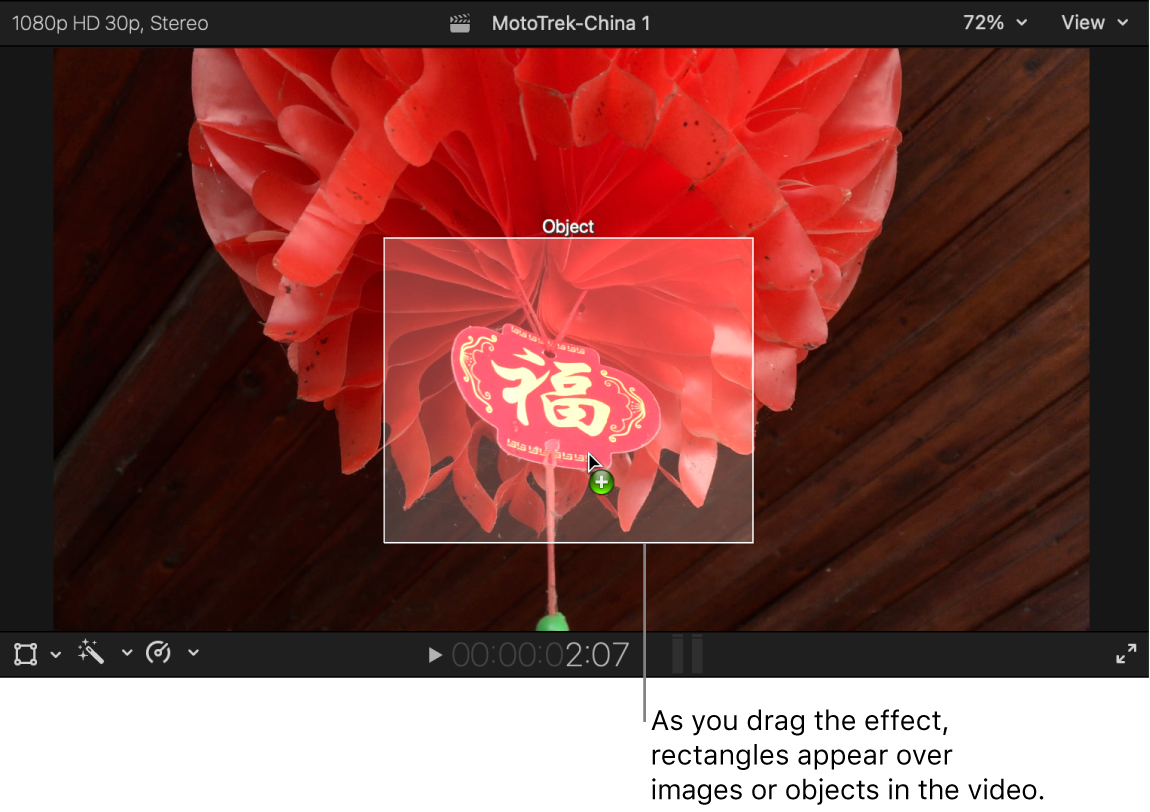

When you drag a clip (such as a title, generator, or still image) or an effect to the viewer, Final Cut Pro suggests an object or area of pixels in the background clip (known as a reference pattern) to “lock onto” as the object moves across the viewer.

Ideally, the reference pattern should be a consistent, easily identifiable detail with high contrast. This makes the pattern easier to track. When you drag the clip or effect to a suggested object, an onscreen tracker appears with controls you can use to adjust the area you want to track.

After you designate a reference pattern, Final Cut Pro analyzes the pattern's motion from frame to frame. It uses two analysis methods (algorithms) to do the calculations for object tracking:

Point Cloud: The Point Cloud method samples many positions in the search region around the center point of the tracker. Some of those positions fit the designated reference pattern more closely than others; the tracker finds the position where the search region most closely matches the reference pattern (with subpixel accuracy). For every frame analyzed, the tracker assigns a correlation value by measuring how close the best match is.

In addition to searching for the reference pattern’s position, the tracker identifies how the pattern transforms (scales, rotates, or distorts) from one frame to the next. Imagine you’re tracking a logo on the shirtsleeve of a person walking past the camera. If the person turns slightly as they pass the camera, the reference pattern rotates. The tracker looks for the reference pattern and any shifts in that pattern’s scale or rotation.
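The position search described above can be illustrated with a small sketch. This is not Final Cut Pro's implementation, which is not public; it is a generic template-matching example that assumes grayscale frames stored as 2D lists and uses normalized cross-correlation as the match score. The function names `ncc` and `best_match` and the `radius` parameter are hypothetical, and the sketch omits the subpixel refinement and the scale and rotation estimation the guide mentions.

```python
import math

def ncc(patch, template):
    """Normalized cross-correlation of two equal-sized 2D lists, in [-1, 1]."""
    a = [v for row in patch for v in row]
    b = [v for row in template for v in row]
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0:
        return 0.0  # a flat patch has no contrast to match against
    return sum(x * y for x, y in zip(da, db)) / denom

def best_match(frame, template, center, radius):
    """Test every top-left position within `radius` of `center` (row, col).

    Returns (best_position, correlation). The correlation plays the role of
    the per-frame match quality value (an assumption of this sketch).
    """
    th, tw = len(template), len(template[0])
    fh, fw = len(frame), len(frame[0])
    best_pos, best_score = None, -2.0
    for r in range(center[0] - radius, center[0] + radius + 1):
        for c in range(center[1] - radius, center[1] + radius + 1):
            # Skip positions where the template would fall outside the frame.
            if r < 0 or c < 0 or r + th > fh or c + tw > fw:
                continue
            patch = [row[c:c + tw] for row in frame[r:r + th]]
            score = ncc(patch, template)
            if score > best_score:
                best_pos, best_score = (r, c), score
    return best_pos, best_score

# Example: a high-contrast 2x2 pattern that moved from (2, 3) to (3, 4).
template = [[9, 1], [1, 9]]
frame = [[0] * 8 for _ in range(8)]
for i in range(2):
    for j in range(2):
        frame[3 + i][4 + j] = template[i][j]
position, correlation = best_match(frame, template, center=(2, 3), radius=2)
```

A flat, low-contrast pattern scores 0 at every position in this sketch, which is one way to see why a consistent, high-contrast detail is easier to track.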

Machine Learning: Final Cut Pro uses a machine learning model trained on a data set to follow objects in a specified region of video. On systems with an Apple Neural Engine, this portion of the algorithm is accelerated.

The machine learning model draws a bounding box around any object it identifies. This method can recognize people, animals, and many common objects.

As it analyzes motion in your project, Final Cut Pro records the data, which you can then apply to any other item (such as a clip, title, image, or effect shape mask) in your project, effectively creating a motion track.
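As a rough illustration of reusing recorded tracking data, the sketch below stores one tracked position per frame and offsets another item's starting position by the tracked object's movement. The `TrackSample` type and `apply_track` function are hypothetical names for this example only, and the sketch covers position alone; it does not reflect how Final Cut Pro stores or applies a motion track internally.

```python
from dataclasses import dataclass

@dataclass
class TrackSample:
    frame: int   # frame index in the analyzed range
    x: float     # tracked object's horizontal position
    y: float     # tracked object's vertical position

def apply_track(track, start_position):
    """Return {frame: (x, y)} for an item that follows the recorded track.

    The item keeps its initial offset from the tracked object: each frame's
    position is the start position plus the object's movement since the
    first sample.
    """
    if not track:
        return {}
    origin = track[0]
    sx, sy = start_position
    return {
        s.frame: (sx + (s.x - origin.x), sy + (s.y - origin.y))
        for s in track
    }

# Example: a title placed at (20, 10) follows an object tracked over 3 frames.
track = [
    TrackSample(0, 100.0, 50.0),
    TrackSample(1, 104.0, 53.0),
    TrackSample(2, 110.0, 55.0),
]
title_positions = apply_track(track, (20.0, 10.0))
```

Because the track is stored separately from the item that follows it, the same recorded data could be applied to a second item with a different starting position.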
